The Peer-to-Peer Review was introduced as a stage of the application process between the administrative review, which assesses applicants' basic eligibility, and the judging of applications by the Wise Head Panel. A systematic review of the process, including feedback from applicants and an analysis of results, suggests that the Peer-to-Peer Review is not only valuable to participating applicants but also a valid and meaningful mechanism for evaluation.

Although its main purpose is to scale the screening of proposals, the Peer-to-Peer Review process benefits applicants in three ways. First, it gives applicants a greater understanding of the scoring rubric and competition criteria, because they actively review other proposals. Second, it gives applicants insight into other ideas and teams and, consequently, an opportunity to identify potential new partners. Lastly, when paired with our Organizational Readiness Tool, it increases the proportion of proposals that advance beyond the administrative review and receive feedback on their work. An initial analysis revealed that, with the addition of the Peer-to-Peer Review, 63% of all submitted applications received substantial feedback, compared with just 42% in the first round of 100&Change.

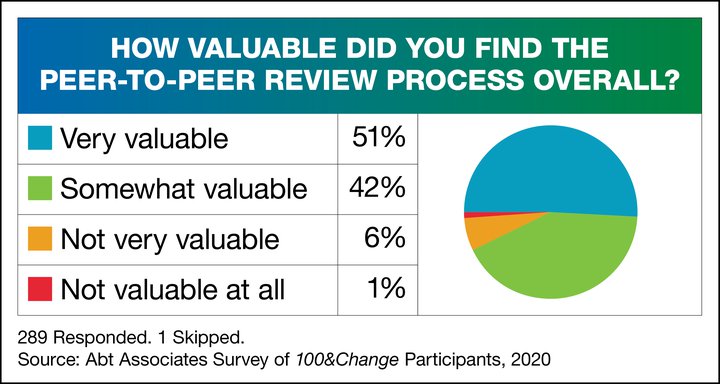

A survey of 100&Change participants showed that a majority (51%) of respondents found the process “very valuable,” and nearly all (93%) rated it at least “somewhat valuable.”

In their written comments, which were collected anonymously through the 100&Change survey, applicants emphasized the value both of receiving feedback from peers and of reviewing applications themselves. One applicant noted that peer review resulted in feedback that “will help…in future applications as well as editing [the] proposal moving forward.” Another respondent noted that the Peer-to-Peer Review “confirmed some of the remaining questions and gaps that we had in the program design and offered good suggestions on how to address them.” The process also helped applicants hone their message as they “continue to refine [the] project and ensure that [they] are communicating [their] vision in a compelling way.”

Applicants also appreciated the opportunity to review other organizations’ proposals, which let them “see how other organizations think and package their work.” Some applicants described how reviewing other applications deepened their understanding of the competition’s evaluation system and gave them insights into “how [they] can improve [their] proposal in future rounds of…100&Change.” For some, engaging with the scoring rubric as an evaluator resulted in “practically and deeply understanding…how [their] project was evaluated.”

Applicants’ feedback indicated that the Peer-to-Peer Review process served as an “inspiring” opportunity to learn about and from other organizations and to get a sense of the “‘Big Ideas’ that other organizations are developing.” Although this initial survey did not ask whether organizations had forged new partnerships as a result of the process, their comments make us optimistic that the Peer-to-Peer Review prompted applicants to “[learn] about new ideas [and think] about collaboration.”

“The Peer-to-Peer review leaves one with greater knowledge and warm feelings toward other applicants.”

The feedback collected from 100&Change applicants suggests that the Peer-to-Peer Review gave respondents a more nuanced understanding of the competition’s scoring rubric and criteria, as well as insights into other teams and ideas. We also wanted to assess the validity of the process as an evaluation tool. To this end, our evaluation partner, Abt Associates, compared applicants’ quartile rankings under the Peer-to-Peer Review with their quartile rankings from the Wise Head Panel. This analysis showed that the two reviews were likely to place the same applicants in the highest- and lowest-scoring (top and bottom) quartiles, suggesting that the two groups were similarly effective evaluators.
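To make the idea of a quartile comparison concrete, here is a minimal, hypothetical sketch in Python. It is not Abt Associates’ actual method, which is not described here; the function names, applicant IDs, and scores are invented for illustration. It ranks applicants by score under each review, takes the top (or bottom) quarter of each ranking, and reports what share of the Peer-to-Peer quartile the Wise Head Panel also placed in that quartile.

```python
from typing import Dict, Set


# Hypothetical helper for illustration only; not Abt Associates' method.
def quartile_members(scores: Dict[str, float], which: str) -> Set[str]:
    """Return the IDs of applicants in the top or bottom quartile of `scores`."""
    if which not in ("top", "bottom"):
        raise ValueError("which must be 'top' or 'bottom'")
    # Rank applicants from highest to lowest score (ties keep input order).
    ranked = sorted(scores, key=lambda app: scores[app], reverse=True)
    k = max(1, len(ranked) // 4)  # quartile size, rounded down, at least one
    return set(ranked[:k]) if which == "top" else set(ranked[-k:])


def quartile_overlap(peer: Dict[str, float], panel: Dict[str, float], which: str) -> float:
    """Share of the peer-review quartile that the panel placed in the same quartile."""
    peer_q = quartile_members(peer, which)
    panel_q = quartile_members(panel, which)
    return len(peer_q & panel_q) / len(peer_q)


# Invented scores for eight hypothetical applicants (A through H).
peer = {"A": 9.1, "B": 8.7, "C": 7.5, "D": 7.0, "E": 6.2, "F": 5.8, "G": 4.9, "H": 3.3}
panel = {"A": 8.8, "B": 8.2, "C": 8.1, "D": 6.5, "E": 6.9, "F": 5.0, "G": 5.2, "H": 3.0}

print(f"Top-quartile agreement: {quartile_overlap(peer, panel, 'top'):.2f}")        # 1.00
print(f"Bottom-quartile agreement: {quartile_overlap(peer, panel, 'bottom'):.2f}")  # 0.50
```

A share near 1.0 means the two reviews largely agree on which applicants belong in that quartile. A real analysis would also need to decide how to handle tied scores and applicant pools whose size is not divisible by four.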

As a result, the 100&Change selection committee received two valuable and largely aligned sets of feedback to consider in its decision-making process. The Peer-to-Peer Review process also ensured that as many applicants as possible received meaningful and valid feedback on their proposals during the competition.

Addressing Applicant Concerns

It is worth noting that applicant feedback was not uniformly positive: a few respondents were concerned that peer reviews would be inherently biased. As one put it, “Although receiving the feedback was helpful in some aspects, we have concerns about the peer review process, as applicants are evaluating other applicants’ work that they are competing against, which could introduce bias into the reviews.”

However, the strong correlation between Peer-to-Peer and Wise Head scoring suggests that peers provided fair assessments of projects. To address this concern further, we reviewed the peer comments and scores and found no evidence that peers gave unwarranted low scores to potential competitors.

Some respondents also expressed concern about how we administered the Peer-to-Peer Review. Our team created some confusion about the required number of peer reviews and how the reviews would be assigned. These problems can and will be fixed: we plan to set clearer expectations and communicate more frequently in advance of the peer review stage of future competitions.

Based on this feedback, we will continue to include a Peer-to-Peer Review in all Lever for Change competitions. While the initial feedback we received on the Peer-to-Peer process from 100&Change participants is heartening, we will also continue to conduct systematic assessments of each competition. We are hopeful that the process will continue to provide meaningful evaluation and “fresh, objective feedback.” We look forward to sharing our progress with you soon. In the meantime, please contact us if you have questions or suggestions.